Website

Paper

Supplementary material

BibTeX

Poster

Code

Website

Paper

Supplementary Material

BibTeX

Poster

Turk Dataset

Website

Paper

BibTeX

Prior work on single-view 3D reconstruction mostly relies on 3D model supervision, which is impractical for many real-world applications.

Recently, some works have focused on single-view 3D reconstruction without 3D supervision. Inspired by this line of work, we investigate whether noisy 3D CAD models obtained from commercial scanning devices can assist single-view 3D reconstruction.

We also formed a paper reading group that covers most of the recent work on single-view 3D reconstruction.

Please check our paper reading list.

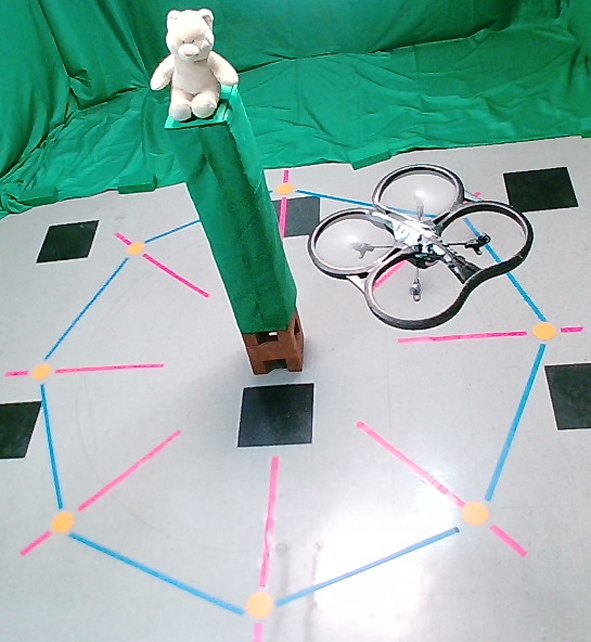

To investigate 3D reconstruction of real-world objects, we curated a new dataset containing 343 object scans. A turntable and a camera are used to capture images of each object from multiple views. These views are then imported into Agisoft to construct real-world 3D models.
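The turntable setup implies a simple nominal camera geometry. The sketch below illustrates it; it is not the project's pipeline. It assumes the table turns by equal steps between shots while the camera stays fixed. The function name, the number of views, the camera radius and the height are all hypothetical, because the source does not state them.

Agisoft estimates the actual camera poses from the images itself. A calculation like this is mainly useful as a sanity check on expected view coverage.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def turntable_camera_positions(num_views: int, radius: float, height: float) -> List[Vec3]:
    """Hypothetical helper: nominal camera centres in the object's (turntable) frame.

    Assumes a fixed camera at distance `radius` from the turntable axis and
    `height` above the table plane, with the table rotating by the same angle
    between consecutive shots. Rotating the object by +theta about the
    vertical axis is equivalent to rotating the camera by -theta.
    """
    if num_views <= 0:
        raise ValueError("num_views must be positive")
    positions: List[Vec3] = []
    for k in range(num_views):
        theta = 2.0 * math.pi * k / num_views  # table angle for shot k
        # Camera starts at (radius, 0, height); rotate it by -theta about z.
        x = radius * math.cos(-theta)
        y = radius * math.sin(-theta)
        positions.append((x, y, height))
    return positions
```

For example, `turntable_camera_positions(36, 0.6, 0.3)` would place 36 virtual cameras 10 degrees apart on a circle of radius 0.6 around the object. This object-frame view is why a fixed camera plus a rotating table can stand in for a camera moving around a static object.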

In addition, to further extend the drone data collection framework, we explore various algorithms, including using the OpenCV CSRT tracker to make an Intel drone autonomously circle objects.
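One way to turn CSRT tracking output into an orbit combines three motions:

- Fly sideways at a constant speed.
- Yaw to keep the tracked box centred in the frame.
- Move forward or back so the box keeps a roughly constant size.

The sketch below illustrates that idea under stated assumptions; it is not the project's controller. The names `orbit_command` and `run_csrt_orbit`, the gains, and the `send_command` callback are hypothetical, because the source does not describe the Intel drone's control interface. The sign conventions (positive yaw turns right, positive lateral velocity moves right) are also assumed.

The tracking calls (`cv2.TrackerCSRT_create`, `init`, `update`) are OpenCV's. In recent releases they are provided by the contrib modules, for example the opencv-contrib-python package.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical gains; a real vehicle would need tuning.
K_YAW = 1.5        # rad/s per unit of normalised horizontal offset
K_FORWARD = 1.0    # m/s per unit of normalised box-width error
ORBIT_SPEED = 0.5  # m/s sideways while the object is kept centred


def orbit_command(bbox: Sequence[float], frame_width: int,
                  target_width_ratio: float = 0.3) -> Tuple[float, float, float]:
    """Hypothetical: map a tracker box (x, y, w, h) to (forward, lateral, yaw_rate)."""
    x, _, w, _ = bbox
    centre_x = x + w / 2.0
    # Horizontal offset of the box centre from the image centre, in [-1, 1].
    offset = (centre_x - frame_width / 2.0) / (frame_width / 2.0)
    # Box width relative to frame width serves as a crude distance proxy:
    # a positive error means the object looks too small, so move closer.
    size_error = target_width_ratio - w / frame_width
    yaw_rate = K_YAW * offset  # assumed: positive yaw turns right
    forward = K_FORWARD * size_error
    return forward, ORBIT_SPEED, yaw_rate


def run_csrt_orbit(source, init_bbox: Sequence[int],
                   send_command: Callable[[float, float, float], None],
                   max_frames: int = 1000) -> None:
    """Track with CSRT and stream orbit commands to a hypothetical drone hook."""
    import cv2  # imported lazily so orbit_command works without OpenCV

    cap = cv2.VideoCapture(source)
    ok, frame = cap.read()
    if not ok:
        raise RuntimeError("could not read the first frame")
    tracker = cv2.TrackerCSRT_create()
    tracker.init(frame, tuple(int(v) for v in init_bbox))
    for _ in range(max_frames):
        ok, frame = cap.read()
        if not ok:
            break
        found, bbox = tracker.update(frame)
        if not found:
            send_command(0.0, 0.0, 0.0)  # zero velocities (hover) while the track is lost
            continue
        send_command(*orbit_command(bbox, frame.shape[1]))
    cap.release()
```

`orbit_command` is a pure function of the box and the frame width, so it can be checked without a camera or a drone. Only `run_csrt_orbit` touches OpenCV.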

We also formed a paper reading group that covers most of the recent work on 3D representations, including voxels, point clouds, meshes, and primitives.

Please check our paper reading list.

The OOWL and OOWL in the wild datasets were collected and later used in Catastrophic Child’s Play: Easy to Perform, Hard to Defend Adversarial Attacks and PIEs: Pose Invariant Embeddings, respectively.