To Overcome the Long-tailed Problem in Computer Vision

Overview

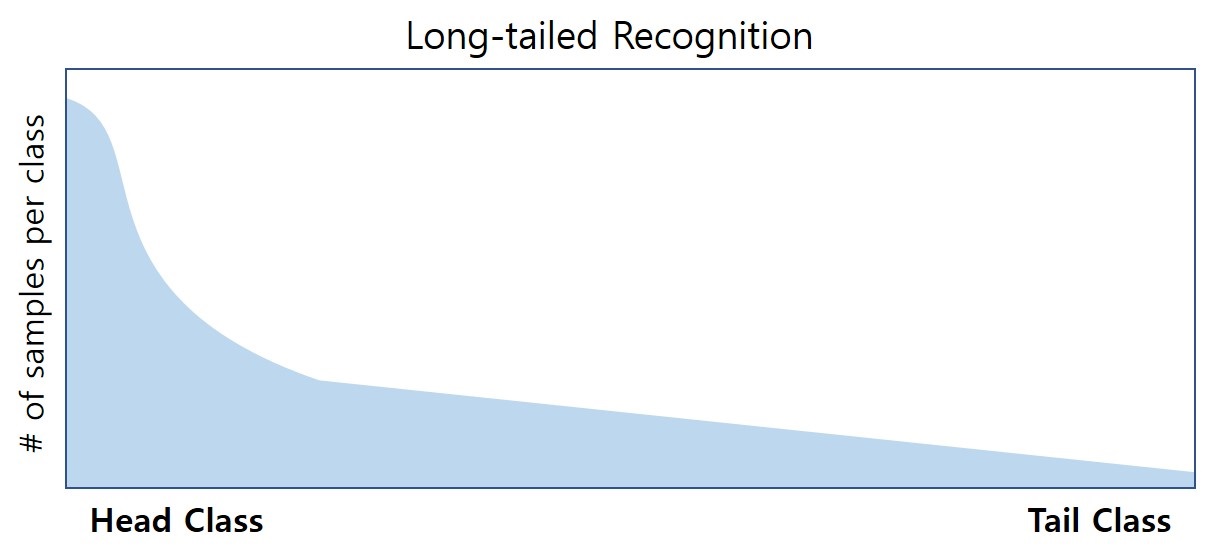

Computer vision is a scientific field that studies how computers can gain a high-level understanding of digital images and videos. From an engineering perspective, it seeks to understand and automate tasks that the human visual system can perform. To support this goal, researchers have assembled many datasets, ranging from handwritten digits and human faces to real-world images and videos. These datasets are carefully collected and annotated, and they are often calibrated so that every category has enough samples for computer vision models to learn from. Real-world images, however, are not distributed like these idealized datasets: some objects are much easier to find than others. This yields a long-tailed distribution of samples across categories, in which a few head categories account for most of the samples while many tail categories have only a few. To overcome this, researchers have explored different sampling strategies for training different parts of the recognition model. In this project, we introduce three works. The first improves few-shot performance by applying semi-supervised learning to unlabeled data. The second extends class-balanced sampling to adversarial feature augmentation. The last combines the two training stages with geometric structure transfer.
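The following Python sketch makes the long-tailed distribution concrete. It generates per-class sample counts that decay exponentially from head to tail classes, a profile often used to build long-tailed variants of balanced benchmarks. The function name `long_tailed_counts` and its parameters `max_count` and `imbalance_ratio` (the ratio of the largest class size to the smallest) are our own illustrative choices. The counts are synthetic, not statistics from the datasets used in these works.

```python
def long_tailed_counts(num_classes, max_count, imbalance_ratio):
    """Hypothetical helper: per-class sample counts decaying exponentially.

    Class 0 (the head) receives max_count samples; the last class (the tail)
    receives about max_count / imbalance_ratio samples, and never fewer than 1.
    """
    if num_classes < 2:
        return [max_count]
    counts = []
    for c in range(num_classes):
        # Position of class c between the head (0.0) and the tail (1.0).
        t = c / (num_classes - 1)
        n = int(max_count * imbalance_ratio ** (-t))
        counts.append(max(n, 1))
    return counts


counts = long_tailed_counts(num_classes=10, max_count=5000, imbalance_ratio=100)
print(counts)
```

With `imbalance_ratio=100`, the tail class ends up with roughly 1% of the head class's samples. A model trained by sampling images uniformly therefore sees head classes far more often than tail classes.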

Projects

Semi-supervised Long-tailed Recognition using Alternate Sampling

The main challenges in long-tailed recognition come from the imbalanced data distribution and the scarcity of samples in its tail classes. Existing techniques aim for a more balanced training loss or synthesize samples to increase the data variation of tail classes. We instead leverage readily available unlabeled data to boost recognition accuracy. This idea leads to a new recognition setting, namely semi-supervised long-tailed recognition. We argue that this setting better resembles the real-world data collection and annotation process and hence can help close the gap to real-world scenarios. To address the semi-supervised long-tailed recognition problem, we present an alternate sampling framework that combines intuitions from successful methods in semi-supervised learning and long-tailed recognition. The classifier and the feature embedding are learned separately and updated iteratively. The classifier is trained with class-balanced sampling, so that it is not affected by the quality of the pseudo labels on the unlabeled data. A consistency loss is introduced to limit the impact of the unlabeled data while still leveraging it to update the feature embedding. We demonstrate significant accuracy improvements over other competitive methods on two datasets.
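Class-balanced sampling, which the classifier stage relies on, can be sketched in Python as a two-step draw: first pick a class uniformly at random, then pick a sample within that class. For contrast, `instance_balanced_batch` draws uniformly over samples. Both function names and the toy labels are hypothetical illustrations, not code from this work. Under our assumptions, an alternate sampling loop would feed the classifier stage from the class-balanced sampler and the embedding stage from a random sampler over labeled and unlabeled data.

```python
import random
from collections import defaultdict


def class_balanced_batch(labels, batch_size, rng):
    """Hypothetical sampler: every class is equally likely, regardless of size."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    classes = sorted(by_class)
    batch = []
    for _ in range(batch_size):
        c = rng.choice(classes)             # step 1: uniform over classes
        batch.append(rng.choice(by_class[c]))  # step 2: uniform within the class
    return batch


def instance_balanced_batch(labels, batch_size, rng):
    """Hypothetical sampler: uniform over samples, so head classes dominate."""
    return [rng.randrange(len(labels)) for _ in range(batch_size)]


rng = random.Random(0)
labels = [0] * 900 + [1] * 90 + [2] * 10  # toy long-tailed label list
cb = class_balanced_batch(labels, 3000, rng)
ib = instance_balanced_batch(labels, 3000, rng)
for c in range(3):
    cb_frac = sum(labels[i] == c for i in cb) / len(cb)
    ib_frac = sum(labels[i] == c for i in ib) / len(ib)
    print(f"class {c}: class-balanced {cb_frac:.2f}, instance-balanced {ib_frac:.2f}")
```

On the toy labels, roughly a third of the class-balanced draws come from the rarest class, compared with about 1% of the instance-balanced draws. The flip side is that the ten tail samples are revisited many times, which is the source of over-fitting to few-shot classes.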

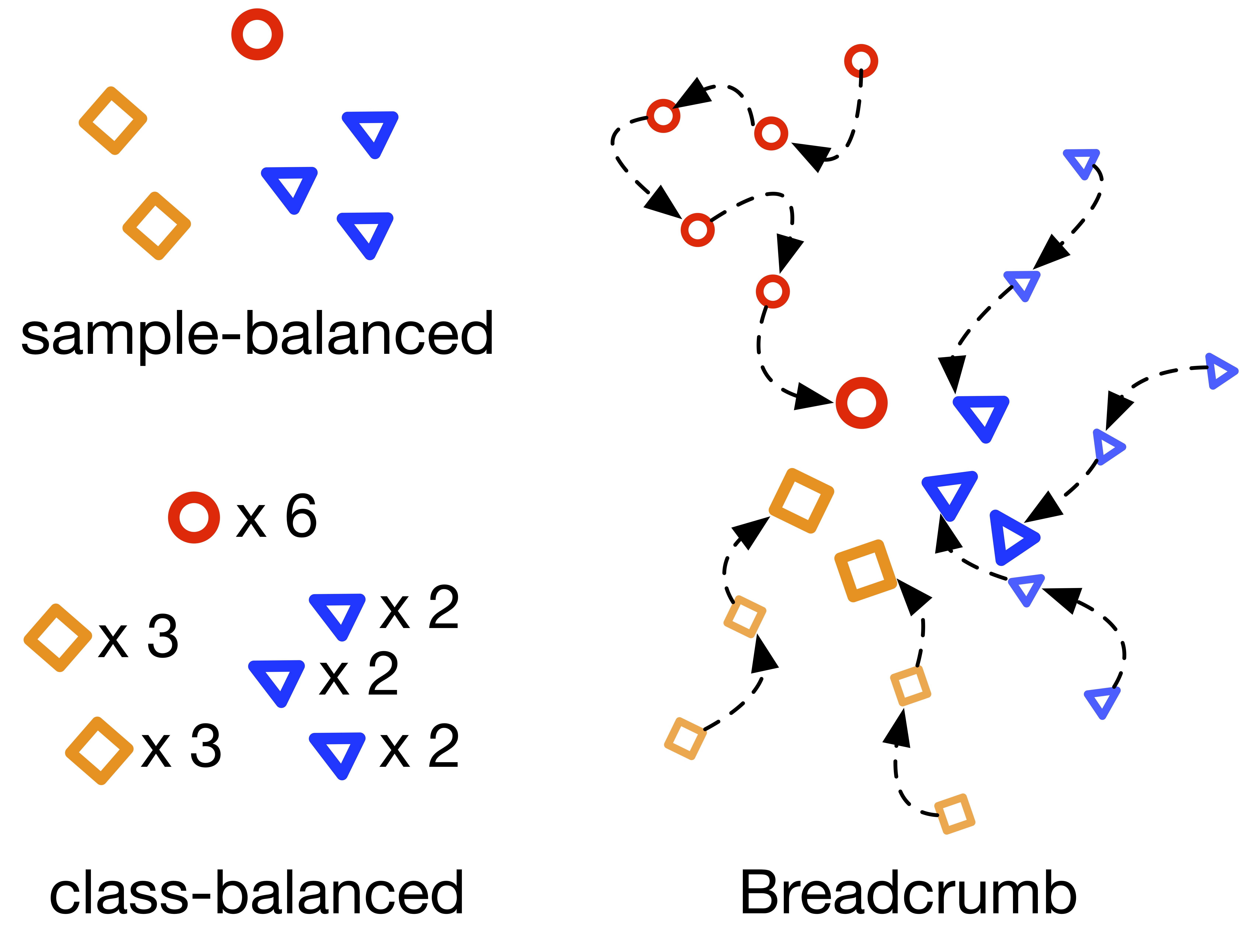

Breadcrumbs: Adversarial Class-Balanced Sampling for Long-tailed Recognition

While training with class-balanced sampling has been shown to be effective for the long-tailed problem, it is known to overfit to few-shot classes. It is hypothesized that this is due to the repeated sampling of the same examples and can be addressed by feature space augmentation. A new feature augmentation strategy, EMANATE, based on back-tracking of features across epochs during training, is proposed. It is shown that, unlike class-balanced sampling, this is an adversarial augmentation strategy. A new sampling procedure, Breadcrumb, is then introduced to implement adversarial class-balanced sampling without extra computation. Experiments on three popular long-tailed recognition datasets show that Breadcrumb training produces classifiers that outperform existing solutions to the problem.
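The back-tracking idea can be illustrated with a small Python helper. It remembers the features each sample produced over the last few epochs and lets class-balanced sampling draw from this trail instead of reusing one current feature per tail sample. `FeatureTrail`, `history`, and the toy drifting features are hypothetical names and data. This sketch shows only the bookkeeping. It does not reproduce the EMANATE or Breadcrumb algorithms, including their adversarial property.

```python
import random
from collections import defaultdict, deque


class FeatureTrail:
    """Hypothetical helper (not the authors' code): stores, for every training
    sample, the features it produced in the last `history` epochs."""

    def __init__(self, history):
        self.history = history
        self.trail = defaultdict(lambda: deque(maxlen=history))
        self.label = {}

    def record(self, idx, label, feature):
        # Called once per sample per epoch with the current network's feature;
        # the oldest entry is dropped once `history` entries are stored.
        self.trail[idx].append(tuple(feature))
        self.label[idx] = label

    def pool(self):
        # Group every stored (sample, epoch) feature by class.
        by_class = defaultdict(list)
        for idx, feats in self.trail.items():
            by_class[self.label[idx]].extend(feats)
        return by_class

    def class_balanced_features(self, per_class, rng):
        # Draw the same number of features for every class. A tail class draws
        # from its past-epoch features instead of repeating one feature.
        batch = []
        for c, feats in sorted(self.pool().items()):
            batch.extend((rng.choice(feats), c) for _ in range(per_class))
        return batch


rng = random.Random(0)
trail = FeatureTrail(history=3)
for epoch in range(5):
    # Toy features that drift from epoch to epoch as the network trains.
    trail.record(0, "head", [1.0 + 0.1 * epoch, 0.0])
    trail.record(1, "head", [0.9 + 0.1 * epoch, 0.1])
    trail.record(2, "tail", [0.0, 1.0 + 0.1 * epoch])
pool = trail.pool()
print(len(pool["tail"]))  # 3: the single tail sample's features from the last 3 epochs
```

Because the embedding keeps changing during training, features from earlier epochs differ from the current ones. A tail class with a single sample therefore still contributes several distinct features to each balanced batch. The stored features were already computed in earlier forward passes, so reusing them requires no additional forward passes.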

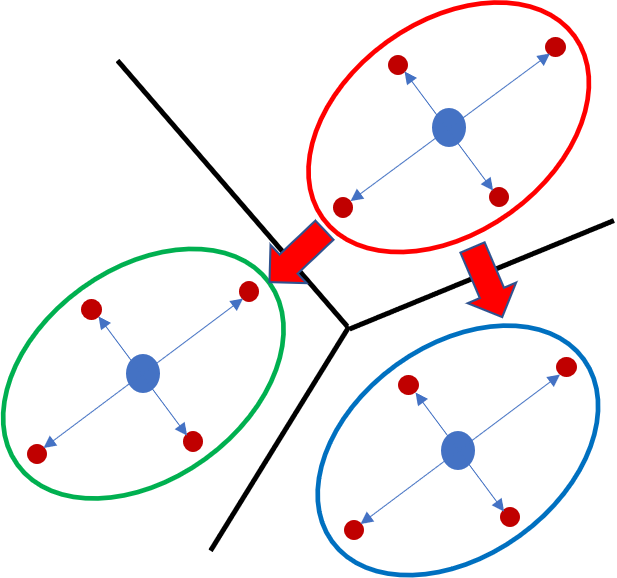

GistNet: a Geometric Structure Transfer Network for Long-Tailed Recognition

While two-stage training is effective for the long-tailed problem, in this work we seek to solve the problem in a single stage for compatibility with other tasks. It is hypothesized that the well-known tendency of standard classifier training to overfit to popular classes can be exploited for effective transfer learning. Rather than eliminating this overfitting, e.g. by adopting popular class-balanced sampling methods, the learning algorithm should instead leverage it to transfer geometric information from popular to low-shot classes. A new classifier architecture, GistNet, is proposed to support this goal, using constellations of classifier parameters to encode the class geometry. A new learning algorithm is then proposed for GeometrIc Structure Transfer (GIST). It uses a combination of loss functions that mix class-balanced and random sampling. This guarantees that overfitting to the popular classes is restricted to the geometric parameters, where it is leveraged to transfer class geometry from popular to few-shot classes. This enables better generalization for few-shot classes without the need to manually specify class weights, or even to explicitly group classes into different types. Experiments on two popular long-tailed recognition datasets show that GistNet outperforms existing solutions to this problem.
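The Python sketch below shows one way to picture "constellations of classifier parameters." Each class owns a center vector, all classes share a set of offset vectors, and a class scores an input by its best match over the constellation of center plus offsets. Because the offsets are shared, structure learned mostly from popular classes would also shape the few-shot classes. The max-over-points scoring, the names `constellation_logits` and `predict`, and the toy vectors are our assumptions for illustration. GistNet's actual parameterization and its GIST loss functions may differ.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def constellation_logits(x, centers, offsets):
    """Hypothetical scoring rule: score feature x against every class.

    centers: one vector per class (class-specific parameters).
    offsets: vectors shared by all classes (the shared geometric structure).
    Each class is the constellation {center + offset}; its score is the best
    match between x and any point of that constellation.
    """
    logits = []
    for w in centers:
        points = [[wi + di for wi, di in zip(w, d)] for d in offsets]
        logits.append(max(dot(x, p) for p in points))
    return logits


def predict(x, centers, offsets):
    # Index of the class with the highest constellation score.
    logits = constellation_logits(x, centers, offsets)
    return max(range(len(logits)), key=logits.__getitem__)


centers = [[1.0, 0.0], [0.0, 1.0]]                 # class 0 (head), class 1 (tail)
offsets = [[0.0, 0.0], [0.5, 0.5], [-0.5, 0.5]]    # shared by both classes
x = [0.2, 0.9]
print(constellation_logits(x, centers, offsets), predict(x, centers, offsets))
```

In the usage example, both classes get their highest score from the shared offset `[0.5, 0.5]`. This illustrates how a single shared geometric element shapes every class's decision region, including that of the tail class.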

Publications

To Overcome Limitations of Computer Vision Datasets

Bo Liu

Ph.D. Thesis, University of California San Diego, 2021.

Semi-supervised Long-tailed Recognition using Alternate Sampling

Bo Liu, Haoxiang Li, Hao Kang, Nuno Vasconcelos, Gang Hua

submitted to IEEE International Conference on Computer Vision (ICCV), 2021.

Breadcrumbs: Adversarial Class-balanced Sampling for Long-tailed Recognition

Bo Liu, Haoxiang Li, Hao Kang, Gang Hua, Nuno Vasconcelos

submitted to IEEE International Conference on Computer Vision (ICCV), 2021.

GistNet: a Geometric Structure Transfer Network for Long-tailed Recognition

Bo Liu, Haoxiang Li, Hao Kang, Gang Hua, Nuno Vasconcelos

submitted to IEEE International Conference on Computer Vision (ICCV), 2021.

Acknowledgements

This work was partially funded by NSF awards IIS-1637941 and IIS-1924937, and by NVIDIA GPU donations.